Despite the rise of AI tools, developers recognize that AI, like ChatGPT, functions more as a co-pilot than a driver in coding. While it can assist with generating code and offering suggestions, it struggles with complex, creative, and logic-heavy tasks. Programming goes beyond syntax—it involves systems thinking, debugging, collaboration, and abstract reasoning, areas where human judgment still excels.

This article explores the coding tasks ChatGPT can't handle, helping developers understand when to rely on AI and when human expertise is irreplaceable. Recognizing these gaps can guide developers in making informed decisions during the coding process.

While ChatGPT can assist with many programming tasks, its capabilities still fall short in specific areas, and those areas continue to depend on human expertise.

ChatGPT can explain error messages and even rewrite broken snippets of code, but it can’t walk through a real, live debugging session in your IDE with actual runtime variables. Real-time debugging isn’t about spotting typos; it’s about context. You have environment-specific variables, unlogged states, race conditions, and interactions between different libraries or APIs.

For example, suppose your Node.js app throws an error only when deployed to a production server with a specific OS-level configuration. ChatGPT has no access to the logs, memory, or live service responses that would help diagnose it. Even if you describe the situation in detail, its response comes from pattern-matching similar cases in its training data, not from inspecting the running system.

That’s one of the biggest AI limitations in programming—it’s blind to the living, breathing state of your application. It can theorize based on symptoms, but it can't observe or manipulate the current environment directly.
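To make the race conditions mentioned above concrete, here is a minimal sketch of a lost update. It is written in Python with `asyncio` purely for illustration (the Node.js event loop can show the same read-await-write pattern), and the `account` dictionary and `withdraw` function are hypothetical names, not taken from any real application.

```python
import asyncio

# Hypothetical shared state standing in for a database row or cache entry.
account = {"balance": 100}


async def withdraw(amount: int) -> None:
    current = account["balance"]          # 1. read the shared value
    await asyncio.sleep(0)                # 2. yield, as a real I/O call would
    account["balance"] = current - amount  # 3. write back a possibly stale value


async def main() -> int:
    account["balance"] = 100
    # Both withdrawals read the balance before either one writes it back.
    await asyncio.gather(withdraw(30), withdraw(50))
    return account["balance"]


final_balance = asyncio.run(main())
# Run one after the other, the withdrawals would leave 20.
# Interleaved, one update overwrites the other.
print(final_balance)
```

Each line of `withdraw` looks correct in isolation, which is why this kind of bug tends to surface only when you watch the interleaving in a running system.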

Designing software architecture is far more complex than writing a script, and ChatGPT struggles with this challenge. While it can suggest folder structures, explain concepts like microservices, and generate boilerplate code, architecture involves making nuanced decisions under multiple constraints. These constraints include scaling, latency, team skill sets, integration with legacy systems, and more.

For example, if asked to design a scalable SaaS platform, ChatGPT might provide a general structure. However, it won’t be able to weigh critical trade-offs, such as choosing between database-level isolation and schema-level isolation for tenants, or selecting the right caching layer for specific access patterns. These decisions require judgment based on company goals, resource limitations, and long-term scalability.

These are decisions where human architects excel: decisions rooted in experience, context, and intuition. ChatGPT simply cannot make these informed choices, which highlights another key coding task it can't perform.
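The tenant isolation trade-off mentioned above can be sketched in a few lines. This is an illustrative sketch only: `Isolation`, `Target`, `resolve_target`, and the `tenant_<id>` naming scheme are assumptions made up for the example, not part of any particular framework.

```python
from dataclasses import dataclass
from enum import Enum


class Isolation(Enum):
    DATABASE = "database"  # one database per tenant
    SCHEMA = "schema"      # one shared database, one schema per tenant


@dataclass(frozen=True)
class Target:
    database: str
    schema: str


def resolve_target(tenant_id: str, strategy: Isolation) -> Target:
    """Map a tenant to the database and schema its queries should use."""
    if strategy is Isolation.DATABASE:
        # Stronger isolation, but more databases to provision and migrate.
        return Target(database=f"tenant_{tenant_id}", schema="public")
    # Cheaper to operate, but tenants share one database's resources.
    return Target(database="shared", schema=f"tenant_{tenant_id}")


print(resolve_target("acme", Isolation.DATABASE))
print(resolve_target("acme", Isolation.SCHEMA))
```

Writing either branch is the easy part. Choosing between them depends on compliance requirements, tenant count, and operational budget, none of which appear in the code.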

One of the biggest limitations of ChatGPT is its inability to invent new algorithms or apply creative reasoning to complex problems. While it can generate known algorithms like Dijkstra’s or Bubble Sort, when faced with more intricate tasks—such as designing an algorithm for modeling overlapping schedules in a time-variant weighted graph—it often relies on vague or repetitive solutions.
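For contrast, this is the kind of well-documented algorithm that is easy to reproduce from known patterns: a textbook version of Dijkstra’s shortest-path algorithm using Python's `heapq`. The adjacency-list format and the sample `graph` are assumptions chosen for the example.

```python
import heapq


def dijkstra(graph: dict, source: str) -> dict:
    """Return the shortest distance from source to every reachable node.

    graph maps each node to a list of (neighbor, non-negative weight) pairs.
    """
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry; a shorter path was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


# Hypothetical sample graph for illustration.
graph = {
    "A": [("B", 1), ("C", 4)],
    "B": [("C", 2), ("D", 5)],
    "C": [("D", 1)],
    "D": [],
}
distances = dijkstra(graph, "A")
print(distances)
```

The version above assumes fixed edge weights. Once weights change over time, as in the scheduling problem described, the standard recipe no longer applies directly and the design work begins.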

Algorithm design requires more than pattern recognition; it demands lateral thinking. Human developers combine domain knowledge with creative problem-solving, testing, and iterating based on insights, not just past examples. ChatGPT, however, cannot take these intuitive leaps. It cannot imagine edge cases, prototype in a sandboxed environment, or analyze performance over time.

This gap highlights AI limitations in programming, especially when projects move beyond simple logic into unstructured, creative problem-solving. Even junior developers, with a bit of elbow grease and testing, can outpace ChatGPT in these areas.

Another zone where ChatGPT hits a ceiling is real-time team collaboration. Coding isn't done in a vacuum. It happens in messy Git branches, sprint backlogs, daily standups, and Slack threads that are full of context. Humans code for other humans, not just for compilers.

ChatGPT doesn't understand the subtle art of naming functions for clarity, documenting for teammates, or responding to a code review with a better refactor. It doesn't feel frustrated when a PR gets rejected, and it doesn't make smart compromises when deadlines loom.

While it can help you code, it can’t help a team collaborate. That means it can’t replace product discussions, cross-functional feedback, or shared intuition about what “good enough” means in a given sprint. Even in pair programming, ChatGPT lacks the shared emotional bandwidth and real-time understanding of evolving team decisions.

This is perhaps one of the most underestimated coding tasks ChatGPT can’t do—being part of the human loop. Not just writing code but also participating in the why and how behind it.

One more place where the limitations surface is in the long-term evolution of a codebase. AI can generate new code fast, but it struggles with maintaining older projects over time. Refactoring code isn't just about reducing lines—it's about understanding architectural goals, technical debt, usage patterns, and upcoming feature changes.

Ask ChatGPT to refactor a legacy app written in spaghetti PHP with hidden dependencies and inconsistent patterns, and it might return cleaner syntax, but cleaner syntax alone is not a sustainable solution. It can't assess module dependencies, measure the real-world impact of a code change, or decide whether to rewrite or patch.

This task requires deep familiarity with both the codebase and the context in which it lives—something AI can’t replicate unless it’s been inside that codebase for months, reviewing commits and understanding evolution. And even then, it would still need human direction. That's another dimension of AI limitations in programming that often gets glossed over in AI hype discussions.

While ChatGPT is a powerful tool for generating code and assisting with simple tasks, it has significant limitations when it comes to complex problem-solving. It struggles with real-time debugging, collaborative coding, algorithm design, and long-term code ownership. The nuanced decision-making required in software architecture and creative coding tasks is where human expertise remains indispensable. Recognizing these gaps allows developers to use AI effectively while knowing when to rely on their skills for more intricate and dynamic aspects of programming.

Advertisement

Looking for the best Airflow Alternatives for Data Orchestration? Explore modern tools that simplify data pipeline management, improve scalability, and support cloud-native workflows

Stay updated with AV Bytes as it captures AI industry shifts and technological breakthroughs shaping the future. Explore how innovation, real-world impact, and human-centered AI are changing the world

Need to update your database structure? Learn how to add a column in SQL using the ALTER TABLE command, with examples, constraints, and best practices explained

How to use MongoDB with Pandas, NumPy, and PyArrow in Python to store, analyze, compute, and exchange data effectively. A practical guide to combining flexible storage with fast processing

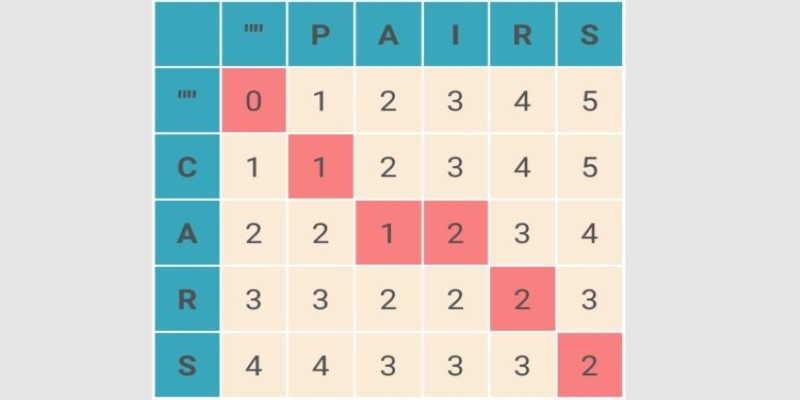

What Levenshtein Distance is and how it powers AI applications through string similarity, error correction, and fuzzy matching in natural language processing

How the ElevenLabs API powers voice synthesis, cloning, and real-time conversion for developers and creators. Discover practical applications, features, and ethical insights

Simpson’s Paradox is a statistical twist where trends reverse when data is combined, leading to misleading insights. Learn how this affects AI and real-world decisions

Gen Z embraces AI in college but demands fair use, equal access, transparency, and ethical education for a balanced future

Volkswagen introduces its AI-powered self-driving technology, taking full control of development and redefining autonomous vehicle technology for safer, smarter mobility

Accessing Mistral NeMo opens the door to next-generation AI tools, offering advanced features, practical applications, and ethical implications for businesses looking to leverage powerful AI solutions

Learn how process industries can catch up in AI using clear steps focused on data, skills, pilot projects, and smart integration

Gemma Scope is Google’s groundbreaking microscope for peering into AI’s thought process, helping decode complex models with unprecedented transparency and insight for developers and researchers