The way companies manage data has changed rapidly in recent years. Businesses now depend on complex workflows to process information, automate tasks, and support decision-making. Apache Airflow became a popular tool for managing these workflows, offering a fresh approach with its DAG-based pipelines and Python-powered flexibility. However, as technology evolves, many organizations seek Airflow alternatives for data orchestration that offer faster deployment, better scalability, or simpler configurations.

No single tool fits every scenario, so exploring other options is essential. Today's businesses need solutions built for modern data challenges, from event-driven workflows to cloud-native designs, which makes the search for alternatives more relevant than ever.

Airflow has many strengths, but it also comes with limitations that prompt teams to explore other tools. Its reliance on Python can be a strength for developers, but it may create barriers for non-technical users. Additionally, Airflow’s scheduler is built around batch processing and isn’t naturally suited for event-driven architectures. Managing and scaling Airflow in large environments can become resource-intensive, especially when dealing with real-time data pipelines or microservices-based architectures.
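To make the batch-versus-event point concrete, here is a small worked sketch in Python. It assumes a hypothetical hourly batch schedule and ignores dispatch overhead; the function names are illustrative and not part of Airflow or any other tool. It compares how long newly arrived data waits before processing starts under each triggering model.

```python
"""Contrast time-based batch scheduling with event-driven triggering.

Hypothetical illustration only: the function names and the hourly interval
are assumptions for this sketch, not part of Airflow's or any tool's API.
"""
import math


def batch_pickup_time(event_minute: float, interval_minutes: float) -> float:
    """Return when a fixed-interval scheduler would first see the event.

    A batch scheduler only runs at multiples of the interval, so an event
    waits until the next tick (an event exactly on a tick is picked up then).
    """
    return math.ceil(event_minute / interval_minutes) * interval_minutes


def event_driven_pickup_time(event_minute: float) -> float:
    """An event-driven trigger starts work as soon as the event arrives
    (dispatch overhead is ignored in this sketch)."""
    return event_minute


def latencies(events, interval_minutes):
    """Return (batch_latency, event_latency) pairs for each event time."""
    return [
        (batch_pickup_time(t, interval_minutes) - t,
         event_driven_pickup_time(t) - t)
        for t in events
    ]


if __name__ == "__main__":
    # Three files land at minutes 1, 17 and 59; the batch job runs hourly.
    events = [1, 17, 59]
    for t, (batch, event) in zip(events, latencies(events, 60)):
        print(f"event at {t:>2} min: batch waits {batch:.0f} min, "
              f"event-driven waits {event:.0f} min")
```

With events at minutes 1, 17, and 59 and an hourly schedule, the batch waits are 59, 43, and 1 minutes, while the event-driven waits are zero. Real schedulers add their own overhead, so this only illustrates the shape of the trade-off.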

For cloud-first organizations, Airflow's configuration-intensive approach may seem dated next to serverless or fully managed orchestration tools. Its lack of native support for streaming, complex event processing, or distributed state management may also narrow its range of applications.

Ultimately, the search for Airflow alternatives for data orchestration begins when teams find that their data workflows are slowing down or becoming too cumbersome to manage. Flexibility, usability, and compatibility with modern cloud infrastructure are the main reasons teams adopt new tools.

A growing number of tools position themselves as direct or partial competitors to Airflow. Each brings its own philosophy, feature set, and design, which can better suit particular types of data workflows.

Prefect is a leading choice among Airflow alternatives for data orchestration because of its clean design and hybrid execution model. It reduces boilerplate code, making workflow development faster and more efficient. Prefect supports running tasks locally, on remote machines, or within Kubernetes, giving teams flexibility in managing their pipelines.

It also addresses a major Airflow limitation by supporting event-driven workflows. Prefect’s focus on observability, fault tolerance, and easy scalability makes it a modern and practical solution for evolving data environments.

Dagster offers a different philosophy in data orchestration by emphasizing software-defined assets and type-checked pipelines. This approach makes data workflows more predictable, structured, and reliable. Unlike Airflow, Dagster doesn't just manage tasks; it also tracks the data assets those tasks produce and can validate them, helping to ensure integrity throughout pipelines.

Its built-in support for data dependency management makes debugging and maintenance easier. Dagster is a strong alternative for teams that need precise control over data flows, reliable validation mechanisms, and clear visibility into their data lifecycle within complex environments.
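A rough way to picture software-defined assets is a registry of functions whose parameter names declare their upstream assets, resolved in dependency order. The sketch below uses only the standard library (`graphlib` needs Python 3.9+); `asset`, `materialize`, and the example assets are hypothetical and only loosely mirror Dagster's approach of inferring dependencies from parameter names. Return annotations are checked at run time to mimic a basic type check.

```python
"""Software-defined assets resolved from a dependency graph.

Hypothetical, stdlib-only sketch: `asset` and `materialize` are illustrative
helpers, not Dagster's API. Parameter names name upstream assets, and return
annotations are checked when each asset is computed.
"""
import inspect
from graphlib import TopologicalSorter
from typing import Callable

ASSETS: dict[str, Callable] = {}


def asset(fn: Callable) -> Callable:
    """Register a function as an asset named after the function."""
    ASSETS[fn.__name__] = fn
    return fn


def materialize() -> dict[str, object]:
    """Compute every registered asset in dependency order, checking types."""
    deps = {name: list(inspect.signature(fn).parameters)
            for name, fn in ASSETS.items()}
    values: dict[str, object] = {}
    for name in TopologicalSorter(deps).static_order():
        fn = ASSETS[name]  # KeyError here means an undeclared upstream asset
        result = fn(**{dep: values[dep] for dep in deps[name]})
        expected = inspect.signature(fn).return_annotation
        # Only check plain-class annotations; skip missing annotations.
        if (expected is not inspect.Signature.empty
                and isinstance(expected, type)
                and not isinstance(result, expected)):
            raise TypeError(f"{name} returned {type(result).__name__}, "
                            f"expected {expected.__name__}")
        values[name] = result
    return values


@asset
def raw_orders() -> list:
    return [{"id": 1, "amount": 30.0}, {"id": 2, "amount": 12.5}]


@asset
def order_total(raw_orders: list) -> float:
    # Depends on raw_orders because of the parameter name.
    return sum(o["amount"] for o in raw_orders)


if __name__ == "__main__":
    print(materialize())  # raw_orders is computed before order_total
```

Materializing computes `raw_orders` before `order_total` (42.5 here), and an asset that returns the wrong type raises `TypeError` instead of passing bad data downstream.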

Mage is a newer platform that focuses on user-friendly data orchestration. It simplifies the process of building, monitoring, and managing workflows with an interactive UI and straightforward deployment. Mage is ideal for small teams looking for quick adoption without a steep learning curve.

Additionally, cloud services like AWS Step Functions and Google Cloud Workflows offer fully managed, scalable orchestration for teams already using these platforms. Because the provider runs the underlying infrastructure, teams can build, execute, and scale workflows within those ecosystems without managing servers themselves.
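AWS Step Functions workflows are defined in the Amazon States Language, a JSON format. A minimal sketch, assuming a hypothetical three-step pipeline, builds such a definition as a Python dict. `Pass` states stand in for real `Task` states so that no resource ARNs have to be invented, and `check_transitions` is a local sanity check, not AWS's own validator.

```python
"""Build a minimal Amazon States Language (ASL) definition for AWS Step Functions.

Sketch under assumptions: the state names are hypothetical, and "Pass" states
stand in for real "Task" states (which would need a Resource ARN).
"""
import json


def linear_state_machine(steps: list[str]) -> dict:
    """Chain the given step names into a linear ASL state machine."""
    if not steps:
        raise ValueError("need at least one step")
    states = {}
    for i, name in enumerate(steps):
        state = {"Type": "Pass"}
        if i + 1 < len(steps):
            state["Next"] = steps[i + 1]  # hand off to the following step
        else:
            state["End"] = True  # last step terminates the execution
        states[name] = state
    return {
        "Comment": "Linear ETL pipeline (illustrative)",
        "StartAt": steps[0],
        "States": states,
    }


def check_transitions(definition: dict) -> bool:
    """Local sanity check (not AWS's validator): every Next target must exist."""
    states = definition["States"]
    if definition["StartAt"] not in states:
        return False
    return all(s.get("End") or s.get("Next") in states for s in states.values())


if __name__ == "__main__":
    definition = linear_state_machine(["Extract", "Transform", "Load"])
    assert check_transitions(definition)
    print(json.dumps(definition, indent=2))
```

The printed JSON could then be supplied as the definition when creating a state machine. In a real pipeline each `Pass` state would typically become a `Task` state that calls a Lambda function or another service integration.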

Argo Workflows is designed specifically for Kubernetes, making it one of the best Airflow alternatives for data orchestration in cloud-native systems. It defines workflows as Kubernetes custom resources, and each step runs in its own container. This containerized execution model reduces dependency conflicts and enhances scalability.

Argo’s support for YAML-defined workflows aligns well with infrastructure-as-code practices. It is especially powerful for organizations operating microservices or distributed systems, offering high flexibility, efficient resource management, and seamless integration with modern cloud-based development environments.
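To show what a containerized, declarative Argo workflow looks like, the sketch below generates a `Workflow` manifest from Python rather than writing YAML by hand. The image, step names, and resource requests are placeholder assumptions; the field names (`entrypoint`, `templates`, `dag.tasks`, `dependencies`, `container`) follow Argo's Workflow resource, but they are worth checking against the Argo documentation for your version.

```python
"""Generate an Argo Workflows manifest for a linear DAG of container steps.

Sketch under assumptions: the image, step names, and resource requests are
placeholders; the field layout follows Argo's Workflow custom resource.
"""
import json


def argo_workflow(steps: list[str], image: str = "alpine:3.19") -> dict:
    """Each step runs in its own container and depends on the previous one."""
    tasks = []
    for i, name in enumerate(steps):
        task = {
            "name": name,
            "template": "run-step",
            "arguments": {"parameters": [{"name": "step", "value": name}]},
        }
        if i > 0:
            task["dependencies"] = [steps[i - 1]]  # DAG edge to the prior step
        tasks.append(task)
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "etl-"},
        "spec": {
            "entrypoint": "pipeline",
            "templates": [
                {"name": "pipeline", "dag": {"tasks": tasks}},
                {
                    "name": "run-step",
                    "inputs": {"parameters": [{"name": "step"}]},
                    "container": {
                        "image": image,
                        "command": ["echo"],
                        # Argo substitutes the input parameter at run time.
                        "args": ["running {{inputs.parameters.step}}"],
                        # Per-container requests let Kubernetes size each step.
                        "resources": {"requests": {"cpu": "100m", "memory": "64Mi"}},
                    },
                },
            ],
        },
    }


if __name__ == "__main__":
    # JSON is also valid YAML 1.2, so this output can be saved as a manifest.
    print(json.dumps(argo_workflow(["extract", "transform", "load"]), indent=2))
```

Every DAG task reuses the shared `run-step` container template, so each step runs in its own pod, and the per-container resource requests are one place where Kubernetes-level control over resource allocation shows up.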

The process of selecting the best Airflow alternative for data orchestration depends heavily on an organization’s specific use case, existing infrastructure, and technical expertise. Teams that prefer a fully managed solution with deep cloud integration might lean toward AWS Step Functions or Google Cloud Workflows. Their tight coupling with cloud services reduces operational overhead but can create vendor lock-in.

Organizations with Kubernetes expertise and containerized workloads might prefer Argo Workflows for its cloud-native capabilities. It supports high scalability and offers better control over resource allocation.

For teams that require flexibility, simplicity, and a hybrid execution model, Prefect often presents the most balanced choice. It retains Airflow's Python-based familiarity while addressing many of its pain points.

Dagster shines in environments where data integrity and asset management are critical. Its focus on software-defined assets ensures better visibility into how data flows across different systems, making debugging and testing easier.

Mage is an appealing choice for smaller teams or companies new to data orchestration. Its intuitive interface and fast setup reduce the barriers to entry, making it easy for non-expert users to build pipelines.

It's also essential to consider operational complexity, licensing costs, community support, and the availability of managed hosting solutions. Some tools, like Prefect and Dagster, offer managed cloud versions, allowing teams to offload infrastructure concerns.

Evaluating the trade-offs between control, ease of use, scalability, and cost will guide organizations toward the most appropriate Airflow alternative for data orchestration.

The landscape of Airflow alternatives for data orchestration is expanding rapidly, offering businesses new ways to manage complex workflows with greater flexibility and efficiency. Tools like Prefect, Dagster, Argo Workflows, and Mage each bring unique strengths to the table, while cloud-native solutions simplify infrastructure management. Choosing the right alternative depends on your specific needs, technical environment, and long-term goals. As data-driven operations continue to grow, adopting the right orchestration tool can transform how organizations handle their workflows and scale their systems.

Advertisement

How self-driving tractors supervised remotely are transforming AI farming by combining automation with human oversight, making agriculture more efficient and sustainable

A former Pennsylvania coal plant is being redeveloped into an artificial intelligence data center, blending industrial heritage with modern technology to support advanced computing and machine learning models

How the Chain of Verification enhances prompt engineering for unparalleled accuracy. Discover how structured prompt validation minimizes AI errors and boosts response reliability

How the AI Robotics Accelerator Program is helping universities advance robotics research with funding, mentorship, and cutting-edge tools for students and faculty

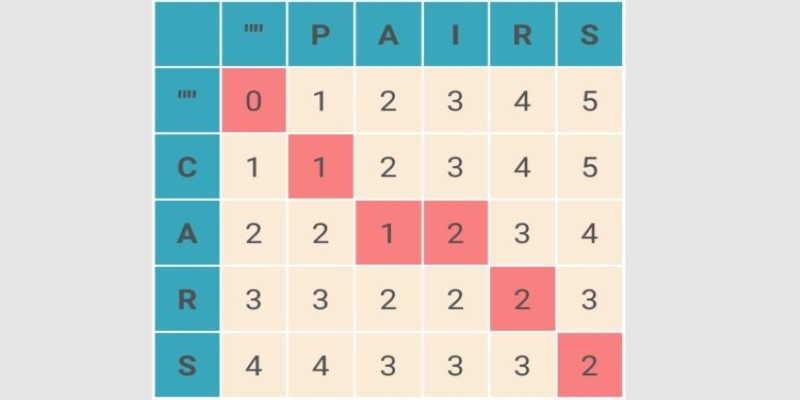

What Levenshtein Distance is and how it powers AI applications through string similarity, error correction, and fuzzy matching in natural language processing

Few-Shot Prompting is a smart method in Language Model Prompting that guides AI using a handful of examples. Learn how this technique boosts performance and precision in AI tasks

What Hannover Messe 2025 has in store, from autonomous robots transforming manufacturing to generative AI driving innovation in industrial automation

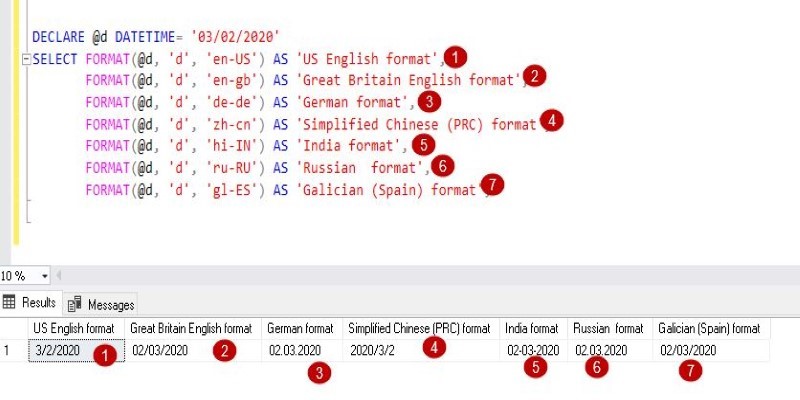

The FORMAT() function in SQL transforms how your data appears without changing its values. Learn how to use FORMAT() in SQL for clean, readable, and localized outputs in queries

Understand how logarithms and exponents in complexity analysis impact algorithm efficiency. Learn how they shape algorithm performance and what they mean for scalable code

Confused between Data Science vs. Computer Science? Discover the real differences, skills required, and career opportunities in both fields with this comprehensive guide

Looking for the best Airflow Alternatives for Data Orchestration? Explore modern tools that simplify data pipeline management, improve scalability, and support cloud-native workflows

Gen Z embraces AI in college but demands fair use, equal access, transparency, and ethical education for a balanced future