At Mobile World Congress 2025 in Barcelona, Fujitsu drew widespread attention with an unsettling yet fascinating glimpse into the future of public safety. The Japanese tech company unveiled an artificial intelligence platform designed to predict crime by analyzing biometric and behavioral data in real time. This is not just another security camera upgrade or smarter sensor.

Fujitsu claims the system can spot potential criminal intent before a crime takes place. By combining AI-powered biometrics with advanced pattern recognition, the company proposes a new approach to urban safety, one that inevitably raises questions about ethics, accuracy, and privacy.

Fujitsu demonstrated a working prototype of its AI-powered biometrics platform at its pavilion, drawing long lines of visitors curious to see the technology in action. The system relies on a network of high-resolution cameras, thermal sensors, and biometric scanners placed throughout a monitored area. Unlike conventional surveillance, which records incidents and analyzes them after the fact, this AI operates in real time.

Together, these sensors capture facial expressions, micro-gestures, posture, gait, and even subtle physiological signals, such as changes in heart rate or skin temperature. The data is then fed into an AI model trained on millions of anonymized data points, which looks for patterns historically associated with aggressive or suspicious behavior. The company claims the AI can flag individuals who exhibit signs of heightened stress, concealed intent, or erratic movements, alerting human monitors so they can intervene before violence or theft occurs.

What sets this system apart from older predictive policing efforts is the granularity of its biometric inputs. Rather than relying only on location-based crime statistics, it draws on real-time human signals, offering situational awareness down to the individual. Fujitsu engineers at the booth explained that the system can be integrated with existing CCTV infrastructure or deployed as a stand-alone solution, with cloud-based analytics providing continuous updates to law enforcement agencies or private security teams.

The demonstration at Mobile World Congress focused on use cases for crowded urban spaces, stadiums, airports, and train stations—places where incidents can escalate quickly if not managed in time. Attendees watched as volunteers acted out common scenarios such as loitering near an exit or engaging in heated arguments. In several instances, the system accurately identified escalating behaviors moments before the actors simulated an actual assault or robbery.

Fujitsu representatives shared data from pilot programs already underway in two Japanese cities and one European airport. In those early trials, incidents of pickpocketing and physical altercations were reportedly reduced by over 20 percent in monitored zones compared to control areas. The technology has also been designed to help identify individuals who may need assistance rather than pose a threat—someone disoriented or at risk of fainting, for example, could be flagged so staff can check on them promptly.

The company emphasized that its goal is not to replace human security personnel but to support them with earlier warnings and better-informed decisions. Its representatives argued that the human element remains necessary, as AI systems are not infallible and may misinterpret cultural differences, disabilities, or unusual but harmless behaviors. In their view, predictive tools are meant to complement, not supplant, trained judgment.

Fujitsu’s unveiling, however, was not without controversy. As news of the system spread during the congress, so did concerns about privacy, bias, and accountability. Many attendees voiced unease about being constantly monitored for hidden signs of wrongdoing, especially since the technology depends on collecting intimate biometric data. Privacy advocates highlighted the risk of misuse in authoritarian settings or against marginalized groups.

The company responded by stressing its commitment to transparency and data protection. According to its engineers, all collected data is anonymized at the source whenever possible, with storage and access governed by stringent security standards. They said the system does not perform racial or gender profiling and is being tested rigorously to reduce false positives, which could lead to unnecessary confrontations or wrongful suspicion.

Legal scholars and ethicists attending the panel discussions warned that existing regulations around public surveillance may not fully account for the capabilities of predictive biometrics. They argued that public debate and updated legal frameworks are needed before widespread adoption of such systems. Questions of who controls the data, how long it’s stored, and what recourse individuals have if flagged unfairly remain open.

The presentation at Mobile World Congress 2025 has reignited a broader conversation about predictive policing technologies in general. Over the past decade, predictive systems have been deployed in various forms, relying mostly on crime statistics, historical trends, and geographic patterns. Fujitsu’s approach represents a significant shift toward individual-level prediction rather than neighborhood-level forecasting.

Proponents argue that smarter surveillance can make cities safer by preventing crime before it happens. Detractors counter that it risks creating a culture of suspicion, where everyone is treated as a potential threat under constant watch. Studies of earlier predictive policing models have already raised concerns about embedded biases, especially against minority communities. Fujitsu's more advanced system raises hopes that better data and more nuanced models can help mitigate those issues, but doubts remain.

For now, the technology remains in the pilot stage, and Fujitsu says it is working with regulators, law enforcement, and civil society to refine its protocols and guidelines. The company hinted at more extensive trials later this year, including in North American and European cities, depending on feedback from this initial rollout.

Fujitsu's AI-powered biometrics platform, showcased at Mobile World Congress 2025, offers a bold take on public safety by predicting crimes from real-time biometric and behavioral data. Early trials reportedly show faster responses and fewer incidents, but the system has sparked heated discussions over privacy, fairness, and possible misuse. Its future as a fixture in urban security depends not just on how the technology evolves, but on whether communities can agree on how to balance enhanced safety with the protection of personal freedoms and rights.

Gain control over who can access and modify your data by understanding Grant and Revoke in SQL. This guide simplifies managing database user permissions for secure and structured access

How the AI Robotics Accelerator Program is helping universities advance robotics research with funding, mentorship, and cutting-edge tools for students and faculty

Explore a detailed comparison of Neo4j vs. Amazon Neptune for data engineering projects. Learn about their features, performance, scalability, and best use cases to choose the right graph database for your system

Google AI open-sourced GPipe, a neural network training library for scalable machine learning and efficient model parallelism

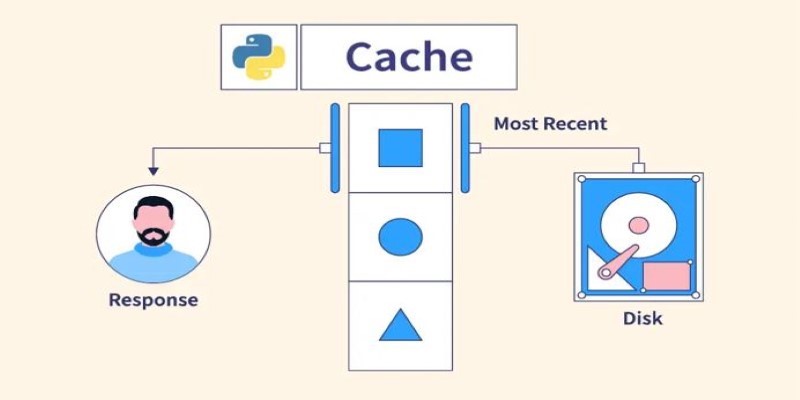

Understand what Python Caching is and how it helps improve performance in Python applications. Learn efficient techniques to avoid redundant computation and make your code run faster

Simpson’s Paradox is a statistical twist where trends reverse when data is combined, leading to misleading insights. Learn how this affects AI and real-world decisions

Find out the key differences between SQL and Python to help you choose the best language for your data projects. Learn their strengths, use cases, and how they work together effectively

How COUNT and COUNTA in Excel work, what makes them different, and how to apply them effectively in your spreadsheets. A practical guide for clearer, smarter data handling

Discover DuckDB, a lightweight SQL database designed for fast analytics. Learn how DuckDB simplifies embedded analytics, works with modern data formats, and delivers high performance without complex setup

Understand the Difference Between Non Relational Database and Relational Database through clear comparisons of structure, performance, and scalability. Find out which is better for your data needs

Understand how TCL Commands in SQL—COMMIT, ROLLBACK, and SAVEPOINT—offer full control over transactions and protect your data with reliable SQL transaction control

Understand how logarithms and exponents in complexity analysis impact algorithm efficiency. Learn how they shape algorithm performance and what they mean for scalable code