Google AI has released a powerful tool for developers and academics: GPipe, its library for training large neural networks, is now open source. Designed to scale deep learning models, GPipe makes it efficient to train a single model across several devices. It enables model parallelism with minimal changes to existing code. Researchers working on large-scale machine learning projects stand to benefit most. GPipe makes it practical to build models that are too large for a single device, and it helps clear common training bottlenecks in deep learning.

GPipe is built for TensorFlow, although its pipelining approach is not tied to any single framework. Google AI's contribution underscores the growing demand for scalable technologies, and the release fits the company's broader initiatives to bring new technologies to the public. By open-sourcing GPipe, Google AI invites the community to collaborate. The library can speed up progress in artificial intelligence across sectors, and it fits readily into modern, scalable machine learning architectures.

GPipe stands out for its approach to model parallelism. It partitions a large neural network across several accelerators. Each partition, or stage, runs on its own device, and the stages execute in sequence, so developers can build larger models than a single device could hold. The pipeline splits each mini-batch into smaller micro-batches, which pass through the stages one after another. While one stage processes a micro-batch, the previous stage can already start on the next one. This keeps the accelerators busy instead of leaving hardware idle during training.
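To make the micro-batch schedule concrete, here is a minimal Python sketch that simulates the forward pass only. It is not GPipe's code. It assumes every stage needs exactly one clock tick per micro-batch, and `gpipe_forward_schedule` is a hypothetical helper name. At tick `t`, stage `k` works on micro-batch `t - k`, which produces the staggered pattern in which each stage holds a different micro-batch.

```python
def gpipe_forward_schedule(num_stages, num_micro_batches):
    """Simulate which micro-batch each stage runs at every clock tick (forward pass only).

    Returns a list of ticks; tick[k] is the micro-batch index on stage k, or None if idle.
    Assumes every stage needs exactly one tick per micro-batch.
    """
    total_ticks = num_micro_batches + num_stages - 1
    schedule = []
    for t in range(total_ticks):
        # Stage k can start micro-batch t - k once stage k - 1 has finished it.
        tick = [t - k if 0 <= t - k < num_micro_batches else None for k in range(num_stages)]
        schedule.append(tick)
    return schedule


if __name__ == "__main__":
    for t, tick in enumerate(gpipe_forward_schedule(num_stages=3, num_micro_batches=4)):
        row = "  ".join("--" if m is None else f"F{m}" for m in tick)
        print(f"t={t}: {row}")
```

Each printed row is one clock tick and each column is one stage. `F2` means the stage is running the forward pass for micro-batch 2, and `--` means it is idle. The idle slots in the first and last rows are the pipeline's fill and drain time.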

GPipe also works with existing deep learning code. Developers can adopt it without rewriting the entire model, because a few changes to the model code are enough. TensorFlow support makes it accessible to a large base of machine learning users. The system can scale across multiple GPUs or TPUs, and its design keeps the overhead of parallel execution low. GPipe reduces memory use while preserving training speed. This makes it well suited to training high-resolution image models and natural language processing models, and it shapes how deep such models can be built and trained.
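According to the GPipe paper, one way GPipe saves memory is re-materialization. During the forward pass, each stage stores only the activations at its input boundary. During the backward pass, it recomputes the activations inside the stage. The paper estimates that this cuts peak activation memory from roughly L × N to N + (L/K) × (N/M), for L layers, K stages, mini-batch size N, and M micro-batches.

The Python sketch below is a deliberately simplified accounting model of that estimate. The function name and the unit of measure (one layer's output for one example) are assumptions for illustration, not measurements of the real library.

```python
def activation_units(num_layers, num_stages, batch_size, num_micro_batches, rematerialize):
    """Rough count of activations one accelerator must hold at peak.

    Unit: one layer's output for one training example. This is a simplified
    model for illustration, not a measurement of any real implementation.
    """
    assert num_layers % num_stages == 0, "assumes layers split evenly across stages"
    assert batch_size % num_micro_batches == 0, "assumes equal-sized micro-batches"
    layers_per_stage = num_layers // num_stages
    micro_batch_size = batch_size // num_micro_batches
    if not rematerialize:
        # Every layer on this stage keeps its output for every example in the
        # mini-batch until the backward pass reaches it.
        return layers_per_stage * batch_size
    # With re-materialization: keep only the stage's input for each example,
    # plus the internal activations of one micro-batch while it is recomputed.
    return batch_size + layers_per_stage * micro_batch_size


if __name__ == "__main__":
    print("single device, no remat:", activation_units(32, 1, 64, 8, rematerialize=False))  # 2048
    print("4 stages, no remat:     ", activation_units(32, 4, 64, 8, rematerialize=False))  # 512
    print("4 stages, remat:        ", activation_units(32, 4, 64, 8, rematerialize=True))   # 128
```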

GPipe offers a fresh take on model parallelism: instead of relying on data parallelism alone, it adds pipeline parallelism. Each stage of the model runs on a separate device, in order. Once a micro-batch finishes stage one, it moves on to the next stage, and another micro-batch enters stage one. This overlap saves both time and money. Because the model is distributed across several devices, each device holds only part of it, which lowers per-device memory consumption. As a result, training models with billions of parameters becomes feasible. GPipe pipelines both the forward and the backward passes across micro-batches, which keeps the accelerators busy for most of each training step. A small amount of idle time, often called the pipeline bubble, remains at the start and end of each mini-batch.
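Pipelining does not remove idle time entirely, but the remaining overhead can be estimated with simple arithmetic. Assume K stages of equal cost and M micro-batches. One pipelined pass then takes M + K - 1 ticks, while each stage is busy for only M of them. The idle ("bubble") fraction is therefore (K - 1)/(M + K - 1). The GPipe paper reports this overhead as negligible once M is at least 4K. The Python sketch below evaluates the formula; the function name is illustrative.

```python
from fractions import Fraction


def bubble_fraction(num_stages, num_micro_batches):
    """Fraction of each stage's time spent idle in one pipelined pass.

    Assumes every stage takes the same time per micro-batch.
    """
    if num_stages < 1 or num_micro_batches < 1:
        raise ValueError("need at least one stage and one micro-batch")
    total_ticks = num_micro_batches + num_stages - 1  # fill + steady state + drain
    idle_ticks = num_stages - 1                        # ticks each stage waits
    return Fraction(idle_ticks, total_ticks)


if __name__ == "__main__":
    K = 4
    for M in (1, 4, 16, 64):
        b = bubble_fraction(K, M)
        print(f"K={K}, M={M:>2}: idle {float(b):.1%}")
```

With four stages, idle time drops from 75% with a single micro-batch to under 5% with 64. This is why splitting each mini-batch into many micro-batches matters.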

Developers do not have to hand-code how the model is split across devices, because the tool handles the assignment. Its micro-batching system reduces wait times and increases throughput, so models reach convergence sooner in wall-clock time. Larger models often achieve higher accuracy, and GPipe makes such models trainable. Demanding tasks such as language modeling become more manageable. Researchers can test new architectures without changing their hardware. GPipe makes scalable machine learning architectures more feasible than ever before.
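How layers are divided among devices matters, because the slowest stage sets the pace of the whole pipeline. The GPipe paper describes a heuristic that balances estimated per-layer costs across partitions. The sketch below swaps in a different, simple objective to illustrate the idea: it chooses contiguous stages that minimize the cost of the most expensive stage, solved by dynamic programming. The per-layer costs and the helper name `partition_layers` are hypothetical.

```python
from functools import lru_cache


def partition_layers(costs, num_stages):
    """Split per-layer costs into contiguous stages, minimizing the largest stage cost.

    Returns a list of (start, end) layer-index pairs, end exclusive.
    """
    n = len(costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need 1 <= num_stages <= number of layers")
    prefix = [0]
    for c in costs:
        prefix.append(prefix[-1] + c)

    @lru_cache(maxsize=None)
    def best(start, stages):
        # Smallest achievable max-stage cost for costs[start:] split into `stages`
        # groups, together with the end index of the first group.
        if stages == 1:
            return prefix[n] - prefix[start], n
        result = (float("inf"), None)
        # Leave at least one layer for each remaining stage.
        for end in range(start + 1, n - stages + 2):
            first = prefix[end] - prefix[start]
            rest, _ = best(end, stages - 1)
            candidate = max(first, rest)
            if candidate < result[0]:
                result = (candidate, end)
        return result

    bounds, start = [], 0
    for stages in range(num_stages, 0, -1):
        _, end = best(start, stages)
        bounds.append((start, end))
        start = end
    return bounds


if __name__ == "__main__":
    layer_costs = [1, 2, 3, 4, 5, 6]  # hypothetical per-layer cost estimates
    for start, end in partition_layers(layer_costs, num_stages=3):
        print(f"stage layers {start}..{end - 1}: cost {sum(layer_costs[start:end])}")
```

For these example costs, the split is layers 0 to 2, 3 to 4, and 5, with stage costs 6, 9, and 6. No contiguous three-way split keeps every stage below 9.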

Working with massive deep learning models imposes real constraints on developers, and GPipe removes several of them. It lets teams run very large models on limited hardware. Splitting the model and overlapping computation conserve memory and make training faster and more efficient. Existing code does not need a major rewrite, so researchers can keep building on their chosen frameworks and maintain them as before. Integration is quick and does not require specialized hardware. This adaptability should help GPipe gain wide acceptance. Small teams can now train sophisticated models that were once practical only for major labs, which creates opportunities in vision, NLP, and beyond.
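Running huge models on limited hardware also relies on the fact that micro-batching does not change what the model learns. GPipe accumulates gradients across the micro-batches of a mini-batch and applies a single synchronous update at the end. The toy Python example below uses a one-parameter linear model with mean squared error; all names in it are illustrative. It checks that averaging equal-sized micro-batch gradients reproduces the full mini-batch gradient.

```python
import math


def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, num_micro_batches):
    """Average the gradients of equal-sized micro-batches (one update per mini-batch)."""
    size, rem = divmod(len(xs), num_micro_batches)
    if rem:
        raise ValueError("this sketch assumes equal-sized micro-batches")
    total = 0.0
    for m in range(num_micro_batches):
        chunk = slice(m * size, (m + 1) * size)
        total += grad_mse(w, xs[chunk], ys[chunk])
    return total / num_micro_batches


if __name__ == "__main__":
    xs = [float(i) for i in range(1, 9)]
    ys = [2.0 * x + 1.0 for x in xs]
    w, lr = 0.5, 0.01
    full = grad_mse(w, xs, ys)
    accum = accumulated_grad(w, xs, ys, num_micro_batches=4)
    print(full, accum, math.isclose(full, accum))
    w -= lr * accum  # single synchronous update for the whole mini-batch
    print("updated w:", w)
```

Only the order of the work changes: each device handles one micro-batch at a time, but the weights move exactly as they would for the whole mini-batch.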

Reduced training costs make long-term studies feasible. Developers can also inspect and debug pipelines more easily, since GPipe logs device performance and usage metrics in detail. Open-source availability speeds up community development, and shared improvements strengthen the tool even further. A scalable training library helps both academia and industry, and GPipe's features offer a practical, effective way to scale machine learning workloads.

Open-sourcing GPipe is a strategic move by Google AI that reflects its goal of making artificial intelligence more widely available. By releasing internal tools, the company enables innovation worldwide. GPipe follows TensorFlow and other Google projects that have been made public. The company's open development policies help level the playing field: smaller labs gain access to tools that were once available only to large companies. This supports more equitable research and experimentation, so developers from any background can build stronger AI models. The effects extend beyond academia into practical applications.

Open-source tools empower developers, NGOs, and startups around the world, and GPipe continues this trend with strong model-scaling capability. Its publication encourages open discussion of model parallelism, and public contributions could uncover new use cases. Google's leadership in sharing AI infrastructure is especially notable: as more tools become public, AI develops faster. With GPipe, the future of artificial intelligence looks more inclusive and more ambitious. When technologies are shared, everyone can build better solutions.

GPipe is designed to work seamlessly with TensorFlow, so developers can adopt it quickly. They need only a few wrapper operations, and existing models can be adapted rather than rebuilt from scratch. Given TensorFlow's popularity, this integration is especially useful. GPipe also works with tools in the TensorFlow ecosystem for debugging, visualization, and logging. It fits well into production pipelines and does not interfere with training schedules or custom operations.
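The idea of adding pipelining through a thin wrapper, rather than rewriting the model, can be sketched in plain Python. This is not GPipe's API. The open-source GPipe ships as part of Google's TensorFlow-based Lingvo framework, whose interfaces are not reproduced here.

The hypothetical `PipelineWrapper` class below groups an existing list of layers into stage cells and feeds the batch through them in micro-batches. It runs everything in one process. It assumes each layer treats examples independently, so its output should match the unwrapped model.

```python
from typing import Callable, List, Sequence

Layer = Callable[[List[float]], List[float]]  # maps a batch to a batch


class PipelineWrapper:
    """Hypothetical wrapper that reuses existing layers unchanged."""

    def __init__(self, layers: Sequence[Layer], boundaries: Sequence[int], num_micro_batches: int):
        # `boundaries` lists the layer indices where a new stage starts,
        # e.g. [2] splits four layers into two stages of two layers each.
        cuts = [0, *boundaries, len(layers)]
        self.cells = [list(layers[a:b]) for a, b in zip(cuts, cuts[1:])]
        self.num_micro_batches = num_micro_batches

    @staticmethod
    def _run_cell(cell: List[Layer], batch: List[float]) -> List[float]:
        for layer in cell:
            batch = layer(batch)
        return batch

    def __call__(self, batch: List[float]) -> List[float]:
        if not batch:
            return []
        size = -(-len(batch) // self.num_micro_batches)  # ceiling division
        outputs: List[float] = []
        for start in range(0, len(batch), size):
            micro = batch[start:start + size]
            # In a real pipeline each cell would live on its own accelerator, and
            # different micro-batches would occupy different cells at once.
            for cell in self.cells:
                micro = self._run_cell(cell, micro)
            outputs.extend(micro)
        return outputs


def run_unwrapped(layers: Sequence[Layer], batch: List[float]) -> List[float]:
    for layer in layers:
        batch = layer(batch)
    return batch


if __name__ == "__main__":
    layers: List[Layer] = [
        lambda xs: [2.0 * x for x in xs],
        lambda xs: [x + 1.0 for x in xs],
        lambda xs: [x * x for x in xs],
        lambda xs: [-x for x in xs],
    ]
    batch = [float(i) for i in range(7)]
    wrapped = PipelineWrapper(layers, boundaries=[2], num_micro_batches=3)
    print(wrapped(batch) == run_unwrapped(layers, batch))  # True
```

The per-example assumption matters. Layers that mix information across a batch, such as batch normalization, behave differently when they see only one micro-batch at a time.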

Users can easily run large models on either local or cloud GPUs. Cloud integration greatly simplifies deployment, and training large models no longer requires unusual hardware configurations. For inference, GPipe can also work alongside TensorFlow Serving. The design keeps future compatibility in mind, and the codebase is clearly written and modular. Developers can fork their own versions or contribute improvements. This adaptability allows GPipe to grow alongside its community. Combined with TensorFlow, it becomes a complete solution for scaling deep learning.

GPipe sets a new benchmark for scalable artificial intelligence tools. It bridges the gap between hardware limitations and model complexity, making high-performance training accessible to researchers without high costs. Neural network training libraries today scale far more readily than they did a few years ago, and the availability of open-source deep learning tools accelerates innovation. Designing scalable machine learning architectures becomes easier and more effective. Google AI's decision creates opportunities for every developer, and tools like GPipe give deep learning a brighter future. By combining clever design with wide accessibility, GPipe helps drive the growth of artificial intelligence worldwide.

Statistical Process Control (SPC) Charts help businesses monitor, manage, and improve process quality with real-time data insights. Learn their types, benefits, and practical applications across industries

A former Pennsylvania coal plant is being redeveloped into an artificial intelligence data center, blending industrial heritage with modern technology to support advanced computing and machine learning models

Explore a detailed comparison of Neo4j vs. Amazon Neptune for data engineering projects. Learn about their features, performance, scalability, and best use cases to choose the right graph database for your system

How to compute vector embeddings with LangChain and store them efficiently using FAISS or Chroma. This guide walks you through embedding generation, storage, and retrieval—all in a simplified workflow

Volkswagen introduces its AI-powered self-driving technology, taking full control of development and redefining autonomous vehicle technology for safer, smarter mobility

How the Chain of Verification enhances prompt engineering for unparalleled accuracy. Discover how structured prompt validation minimizes AI errors and boosts response reliability

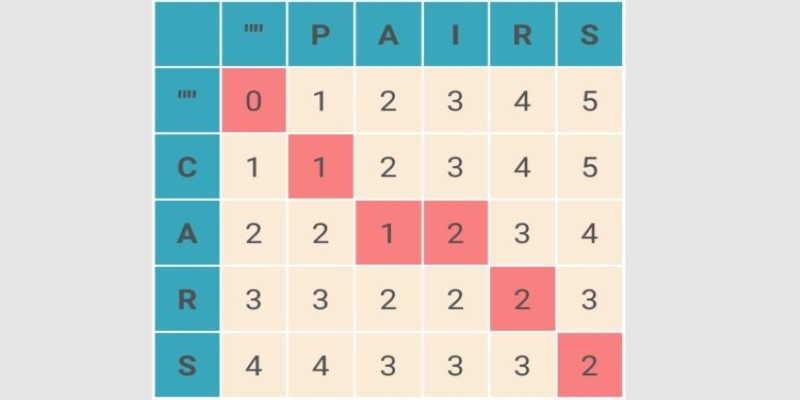

What Levenshtein Distance is and how it powers AI applications through string similarity, error correction, and fuzzy matching in natural language processing

Understand how TCL Commands in SQL—COMMIT, ROLLBACK, and SAVEPOINT—offer full control over transactions and protect your data with reliable SQL transaction control

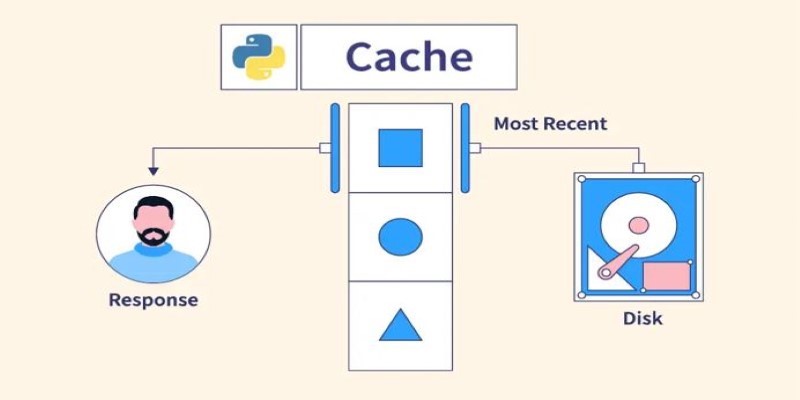

Understand what Python Caching is and how it helps improve performance in Python applications. Learn efficient techniques to avoid redundant computation and make your code run faster

Understand the real-world coding tasks ChatGPT can’t do. From debugging to architecture, explore the AI limitations in programming that still require human insight

IBM’s Project Debater lost debate; AI in public debates; IBM Project Debater technology; AI debate performance evaluation

Accessing Mistral NeMo opens the door to next-generation AI tools, offering advanced features, practical applications, and ethical implications for businesses looking to leverage powerful AI solutions