AI isn’t always about complex models or endless data. Sometimes, it’s about asking the right way. Think of training a new hire—show them a few solid examples, and they catch on fast. That’s the core of Few-Shot Prompting. Instead of giving an AI zero or just one example, you provide a handful—enough to show the format and intent.

This simple method makes a huge difference in how well the model understands and responds. It brings clarity, boosts accuracy, and improves results in tasks like summarization, translation, or analysis. Few-shot prompting turns clear examples into powerful, efficient AI communication.

Few-shot prompting feels less like engineering and more like coaching. You’re not reprogramming a language model or digging into its layers—you’re simply guiding it with a handful of examples. Think of it as showing a new team member how to do something by giving them a few solid references. No extra training, no system overhaul—just clear signals.

Here's how it works: you give the model a few examples of inputs paired with the outputs you expect. Then, you add a new input and let the model follow the same pattern. Say you're translating English into French. You'd include a couple of English sentences followed by their French equivalents. Once the model sees the structure, the trend continues. It's not learning French; it's spotting the format and mimicking it—like finishing a melody after hearing the first few notes.

Modern language models are built to be context-aware. They treat everything in the prompt as one continuous sequence, predicting the next part based on what came before. With a few strong examples, you're essentially shaping how the model thinks through your request.

But there's a catch: you're working within a limit. Too many or too long examples, and you run out of room for your actual prompt. That's why clarity and brevity matter so much.

Few-shot prompting is incredibly useful when labeled data is limited, the task isn’t easily defined, or you just want a fast, flexible way to interact with a model. In many cases, better prompts mean better results—no fine-tuning required.

The biggest advantage of few-shot prompting is its flexibility. You don’t need to retrain or fine-tune a model to get task-specific performance. Instead, you guide the model through context alone. This makes it ideal for rapid prototyping, custom task execution, and on-the-fly language generation. You can generate product descriptions, classify support tickets, extract data from text, or even simulate role-based conversations with minimal setup.

Another major benefit is that few-shot prompting tends to produce more consistent results than zero-shot prompting. When the model has no context, it often makes guesses that don’t match the format you had in mind. With few examples to anchor it, the model has a template to follow. This is particularly useful in Language Model Prompting for tasks like generating code snippets, answering questions in a specific style, or formatting responses for chatbots.

However, few-shot prompting has its challenges too. For one, you’re still working with a model that hasn’t truly “learned” your task. It’s mimicking a pattern, not adapting its behavior permanently. That means there’s room for drift—if your examples aren’t clear, the model may go off-script. You also run into issues when the task is too complex to capture in a few examples. For deeper reasoning or nuanced decision-making, prompt-only approaches may fall short.

Another limitation is token length. If you’re dealing with long-form input or multi-step reasoning, few-shot prompting might not leave enough room in the prompt to include everything you need. In such cases, breaking the task into smaller parts or using multi-turn prompting becomes necessary.

Despite these constraints, few-shot prompting remains a powerful technique because of its simplicity. You don’t need a custom-built model. You just need good examples—and that makes this method incredibly efficient for developers, researchers, and product teams alike.

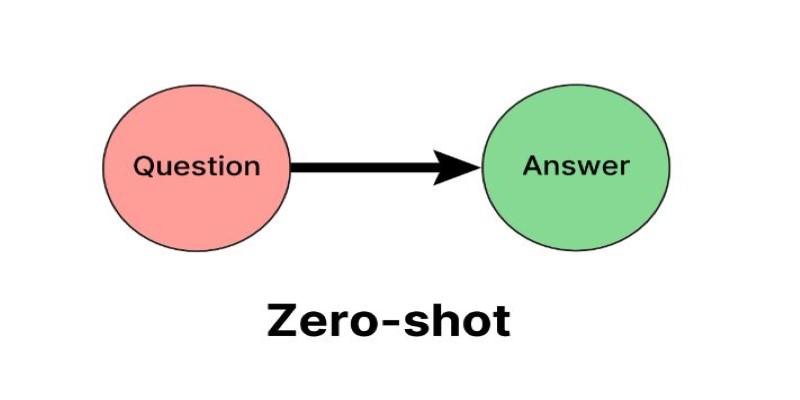

When comparing prompting strategies, it helps to think of them as points along a spectrum.

Zero-shot prompting assumes the model already understands the task. You simply tell it what to do without giving examples. This is efficient, but it often results in vague or inconsistent outputs, especially for unfamiliar tasks.

One-shot prompting provides a single example before the main request. This improves performance slightly, especially when the example is strong, but it doesn’t offer enough pattern variety for the model to generalize.

Few-shot prompting, in contrast, gives just enough variety to establish a reliable pattern. The model can now infer structure, tone, and logic. It’s not overkill like fine-tuning, where you train on thousands of examples and need a custom training pipeline. But it’s also not underpowered like zero-shot prompting, which leaves too much to chance.

There’s also chain-of-thought prompting, which sometimes overlaps with few-shot techniques. In this method, each example includes the reasoning process leading to the final answer. This works particularly well for complex tasks like math or logic problems. When combined with few-shot prompting, it enhances both performance and transparency.

So, while few-shot prompting isn’t the answer to every problem, it often hits the sweet spot: low effort, high impact. It’s particularly effective when you need speed, don’t have time for dataset curation, or just want to experiment with how models behave in different contexts.

Few-shot prompting shows that a handful of good examples can go a long way in guiding AI behavior. Instead of complex training or heavy coding, it relies on simple, well-structured prompts to teach models what to do. This approach mirrors how humans learn—with just a few cues, we understand patterns and apply them. It’s fast, efficient, and incredibly useful across many AI tasks. As language models become more capable, the way we prompt them matters more than ever. Few-shot prompting isn’t just a shortcut—it’s a practical strategy that makes interacting with AI smarter, smoother, and more predictable.

Accessing Mistral NeMo opens the door to next-generation AI tools, offering advanced features, practical applications, and ethical implications for businesses looking to leverage powerful AI solutions

Discover how DataRobot training empowers citizen data scientists with easy tools to boost data skills and workplace success

Stay updated with AV Bytes as it captures AI industry shifts and technological breakthroughs shaping the future. Explore how innovation, real-world impact, and human-centered AI are changing the world

Gen Z embraces AI in college but demands fair use, equal access, transparency, and ethical education for a balanced future

Can Germany's new AI self-driving test hub reshape the future of autonomous vehicles in Europe? Here's what the project offers and why it matters

Need to update your database structure? Learn how to add a column in SQL using the ALTER TABLE command, with examples, constraints, and best practices explained

Uncover the best Top 6 LLMs for Coding that are transforming software development in 2025. Discover how these AI tools help developers write faster, cleaner, and smarter code

Fujitsu AI-powered biometrics revealed at Mobile World Congress 2025 claims to predict crime before it happens, combining real-time behavioral data with AI. Learn how it works, where it’s tested, and the privacy concerns it raises

Google AI open-sourced GPipe, a neural network training library for scalable machine learning and efficient model parallelism

IBM’s Project Debater lost debate; AI in public debates; IBM Project Debater technology; AI debate performance evaluation

Wondering whether to use Streamlit or Gradio for your Python dashboard? Discover the key differences in setup, customization, use cases, and deployment to pick the best tool for your project

Gemma Scope is Google’s groundbreaking microscope for peering into AI’s thought process, helping decode complex models with unprecedented transparency and insight for developers and researchers