Artificial intelligence is now deeply embedded in daily life, powering everything from smartphones to healthcare systems. Yet one big question remains: how do we understand what is happening inside these complex models? Enter Gemma Scope, an interpretability toolkit from Google DeepMind built to give researchers a clearer view of the internal computations of its Gemma language models. Acting like a microscope for machine learning systems, Gemma Scope goes beyond surface-level outputs.

It uncovers the reasoning, decision paths, and hidden patterns within AI models. In a world increasingly shaped by artificial intelligence, tools like Gemma Scope are essential for ensuring transparency, trust, and responsible AI development.

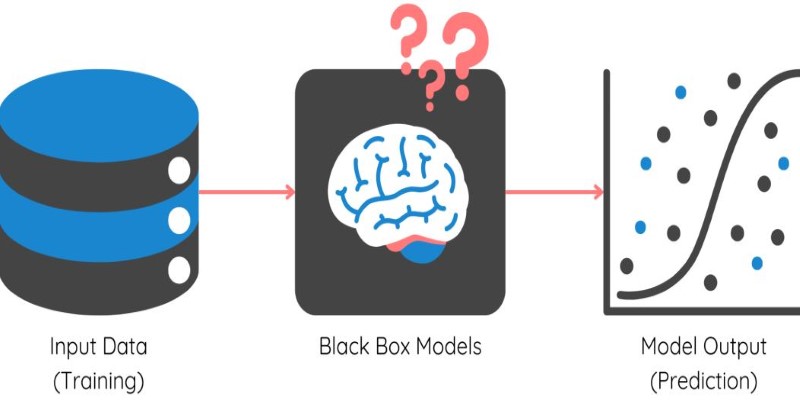

For years, one of the biggest concerns in the AI community has been the so-called "black box problem." Machine learning models, particularly deep neural networks, have grown so complex that even their creators often struggle to explain how they arrive at specific conclusions. This lack of transparency is not just a technical flaw—it’s a fundamental trust issue. Users, regulators, and even developers need to know why an AI system made a decision, especially in sensitive fields like medicine, law enforcement, or financial services.

Traditional approaches to AI interpretability often fell short because they were limited to surface-level observations. Developers could see input and output, but the inner workings—the paths the model followed, the decisions it weighted most heavily—remained obscured. This is where the concept of a "microscope for AI" started to take hold. The goal was to create tools that don’t just monitor but genuinely understand the layers of reasoning within AI systems. And that’s the gap Google set out to bridge with Gemma Scope.

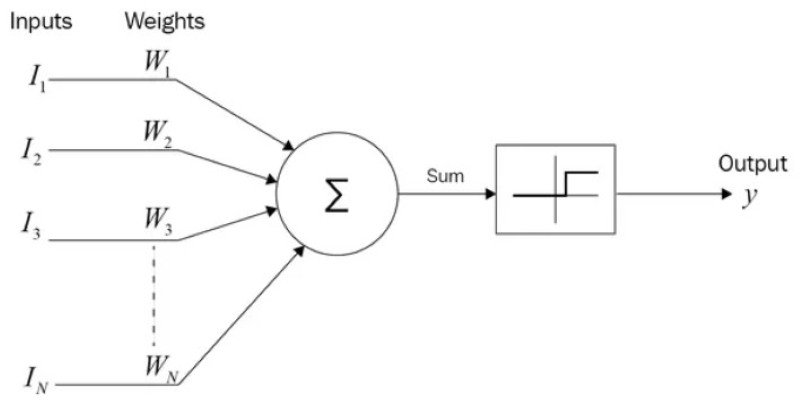

Gemma Scope is a Google DeepMind research release designed to demystify the internal workings of advanced AI models. It operates like a diagnostic tool, enabling engineers and researchers to trace how a decision takes shape inside a model. Rather than relying on simplified charts or prediction confidence scores, it ships as an open collection of sparse autoencoders trained on the internal activations of Gemma 2 models, so researchers can examine what individual layers represent while the model reads and generates text.

At its core, Gemma Scope builds on techniques from explainable AI (XAI), visualization, and model auditing. The central one is the sparse autoencoder, a small network that re-expresses a layer's activations as a much larger set of features, only a few of which are active for any given input. Its real power lies in showing how a model handles a specific task. For example, given a sentence, researchers can see which features activate on which tokens, then inspect the text that triggers each feature most strongly to judge what concept it might represent. This exposes word associations, context weighting, and intermediate steps that contribute to a particular output.
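To make the idea of decomposing activations into features more concrete, here is a minimal sketch of a sparse autoencoder forward pass with a JumpReLU-style threshold, the activation described in the Gemma Scope research. Everything in it is an assumption for illustration: the weights are random rather than the released ones, the sizes are tiny, and the helper names (`encode`, `decode`, `jump_relu`) are hypothetical, not part of any Gemma Scope API.

```python
import random


def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def jump_relu(pre_acts, thresholds):
    """Keep a pre-activation only if it exceeds its per-feature threshold."""
    return [z if z > t else 0.0 for z, t in zip(pre_acts, thresholds)]


def encode(activation, w_enc, b_enc, thresholds):
    """Map one model activation vector to sparse feature activations."""
    pre = [z + b for z, b in zip(matvec(w_enc, activation), b_enc)]
    return jump_relu(pre, thresholds)


def decode(features, w_dec, b_dec):
    """Reconstruct the activation vector from the sparse features."""
    return [r + b for r, b in zip(matvec(w_dec, features), b_dec)]


# Toy sizes and random weights (assumptions, not the real parameters).
rng = random.Random(0)
d_model, n_features = 4, 16
w_enc = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_features)]
b_enc = [0.0] * n_features
thresholds = [1.0] * n_features
w_dec = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(d_model)]
b_dec = [0.0] * d_model

# Stand-in for one activation vector captured from a model layer.
activation = [rng.gauss(0, 1) for _ in range(d_model)]

features = encode(activation, w_enc, b_enc, thresholds)
reconstruction = decode(features, w_dec, b_dec)
active = [i for i, f in enumerate(features) if f > 0.0]

print("active feature indices:", active)
print("reconstruction length:", len(reconstruction))
```

In a trained autoencoder the weights are learned so that the reconstruction stays close to the original activation while few features fire. With random weights, as here, only the mechanics carry over.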

This level of insight matters because it goes beyond a "snapshot" of AI behavior: it exposes intermediate representations layer by layer as the model processes an input. Developers can use it not only to debug errors but also to understand strengths and weaknesses in what the model has learned.

Gemma Scope is poised to revolutionize several critical areas of AI research and development. First and foremost, it enhances model transparency, enabling organizations to create more ethically responsible AI systems. By understanding what features or biases an AI model relies on, developers can fine-tune datasets, adjust model parameters, and ensure that outcomes are not only accurate but also fair and unbiased.

Another vital application lies in safety and error detection. Complex AI systems occasionally make mistakes that seem baffling to human users. These errors often stem from misunderstood patterns in training data or overfitting to specific scenarios. Gemma Scope helps teams pinpoint exactly where these mistakes happen and why, allowing faster and more targeted interventions.
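One simple way to narrow down where a mistake comes from is to compare feature activations for an input the model handles well against one it gets wrong, then look at the features that changed most. The sketch below assumes the feature activations have already been extracted by an interpretability tool. The function name `largest_shifts` and the numbers are made up for illustration.

```python
def largest_shifts(baseline, failing, k=3):
    """Return the k (feature_index, delta) pairs with the largest absolute change."""
    deltas = [(i, f - b) for i, (b, f) in enumerate(zip(baseline, failing))]
    deltas.sort(key=lambda pair: abs(pair[1]), reverse=True)
    return deltas[:k]


# Illustrative feature activations, not real measurements.
baseline = [0.0, 2.1, 0.0, 0.4, 0.0]  # input the model answers correctly
failing = [0.0, 2.0, 3.5, 0.0, 0.0]   # input the model gets wrong

print(largest_shifts(baseline, failing, k=2))  # [(2, 3.5), (3, -0.4)]
```

A feature that fires strongly only on the failing input, like index 2 here, is a candidate to inspect further. The comparison alone does not establish that it caused the error.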

Additionally, Gemma Scope is crucial for compliance with emerging regulations on AI explainability. Across the globe, governments and regulatory bodies are pushing for laws that require companies to provide clear explanations for automated decisions, particularly when they affect people’s lives in significant ways. Tools like Gemma Scope could serve as the gold standard for meeting these legal and ethical obligations.

Moreover, the educational potential of Gemma Scope should not be overlooked. AI education often struggles with abstract concepts that are difficult to visualize. With its dynamic interface and detailed interpretability tools, Gemma Scope can serve as a teaching aid for students and professionals learning about machine learning and neural networks.

Google’s introduction of Gemma Scope signals a broader industry shift toward transparency and accountability in AI development. It represents a step away from treating machine learning models as untouchable monoliths and moves toward treating them as dynamic systems that must be understood and improved upon collaboratively.

As AI continues to evolve, the role of interpretability tools like Gemma Scope will only grow more critical. This is especially true as we move into an era where AI systems are responsible for increasingly autonomous decisions. From self-driving cars to automated healthcare diagnostics, the ability to explain and validate AI behavior is becoming a non-negotiable part of technology development.

Gemma Scope is also likely to inspire new research in the field of AI interpretability. While Google has taken the lead in creating this microscope for AI’s thought process, it opens the door for other companies and academic institutions to build upon its framework. Future versions of interpretability tools may go even deeper, incorporating real-time diagnostics, predictive modeling of errors, and automated auditing capabilities.

In this landscape, trust will become the ultimate currency of AI adoption. Users will not only demand smart systems—they will demand systems that can explain themselves. With Gemma Scope, Google has made a powerful statement about the future of AI: the age of the black box is coming to an end.

Google’s Gemma Scope marks a turning point in the journey toward transparent and accountable AI. By revealing the hidden layers of machine learning models, it gives developers and users a clearer understanding of how AI systems think and decide. This breakthrough tool not only enhances trust but also ensures fairness and ethical use of technology. As AI continues to shape our world, tools like Gemma Scope will be essential in building systems that are not only powerful but also explainable and human-centered.

Gain control over who can access and modify your data by understanding Grant and Revoke in SQL. This guide simplifies managing database user permissions for secure and structured access

Volkswagen introduces its AI-powered self-driving technology, taking full control of development and redefining autonomous vehicle technology for safer, smarter mobility

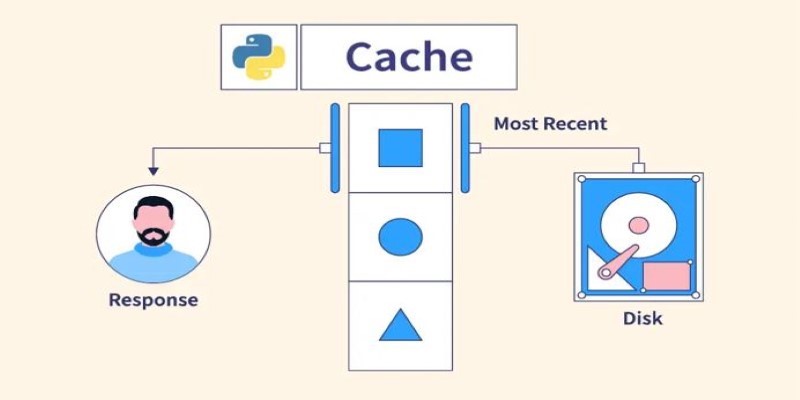

Understand what Python Caching is and how it helps improve performance in Python applications. Learn efficient techniques to avoid redundant computation and make your code run faster

What Hannover Messe 2025 has in store, from autonomous robots transforming manufacturing to generative AI driving innovation in industrial automation

Need to update your database structure? Learn how to add a column in SQL using the ALTER TABLE command, with examples, constraints, and best practices explained

How to compute vector embeddings with LangChain and store them efficiently using FAISS or Chroma. This guide walks you through embedding generation, storage, and retrieval—all in a simplified workflow

How the AI Robotics Accelerator Program is helping universities advance robotics research with funding, mentorship, and cutting-edge tools for students and faculty

How the ElevenLabs API powers voice synthesis, cloning, and real-time conversion for developers and creators. Discover practical applications, features, and ethical insights

Uncover the best Top 6 LLMs for Coding that are transforming software development in 2025. Discover how these AI tools help developers write faster, cleaner, and smarter code

How the SUMPRODUCT function in Excel can simplify your data calculations. This detailed guide explains its uses, structure, and practical benefits for smarter spreadsheet management

Can Germany's new AI self-driving test hub reshape the future of autonomous vehicles in Europe? Here's what the project offers and why it matters

Find out the key differences between SQL and Python to help you choose the best language for your data projects. Learn their strengths, use cases, and how they work together effectively