
Prompt engineering is no longer just about clever wording or clean formatting. As AI enters high-stakes fields like legal summaries and medical analysis, the need for accurate, verifiable responses has become critical. That’s where the Chain of Verification changes the game. Instead of relying on a single prompt, this approach uses a structured series of prompts that validate and cross-check each other.

It introduces built-in feedback, turning prompt creation into a layered process. AI models still make mistakes, but this method builds a buffer against them. In this article, we’ll explore how this system makes prompt engineering more accurate and easier to verify.

At its core, the Chain of Verification is a structured method of prompt creation that emphasizes built-in feedback loops. Rather than sending a single prompt to an AI model and accepting the response at face value, this method introduces multiple interdependent prompts, each acting as a validator for the last.

Think of it like writing an essay and then handing it to three different editors before publishing. One checks for factual accuracy. Another checks tone and clarity. A third compares the final result with the original goal. Each pass adds a layer of accountability.

In prompt engineering terms, you might start with a base prompt to generate a response, then follow up with a second prompt that fact-checks that response. A third might compare the output to a dataset or context reference. Finally, a fourth prompt may rank or revise the entire response chain. Taken together, the chain does more than polish the output: it confirms that the output is aligned with your goals and backed by validation.
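The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `LLM`, `ChainResult`, and `run_verification_chain` are hypothetical names, the prompt wording is only one possible phrasing, and the model is assumed to be any function that takes a prompt string and returns text.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: the model is any callable that maps a prompt to a text reply.
LLM = Callable[[str], str]


@dataclass
class ChainResult:
    draft: str            # step 1: base response
    fact_check: str       # step 2: review of the draft's claims
    reference_check: str  # step 3: comparison against reference material
    final: str            # step 4: revision informed by both reviews


def run_verification_chain(llm: LLM, task: str, reference: str) -> ChainResult:
    """Draft, fact-check, compare with a reference, then revise."""
    draft = llm(f"Task: {task}\nWrite a response.")
    fact_check = llm(
        "List every factual claim in the response below and mark each one "
        f"SUPPORTED, UNSUPPORTED, or UNCERTAIN.\n\nResponse:\n{draft}"
    )
    reference_check = llm(
        "Compare the response with the reference text and list any "
        "contradictions or omissions.\n\n"
        f"Reference:\n{reference}\n\nResponse:\n{draft}"
    )
    final = llm(
        "Revise the response so that it addresses every issue raised in "
        "the two reviews.\n\n"
        f"Task: {task}\n\nResponse:\n{draft}\n\n"
        f"Fact check:\n{fact_check}\n\nReference check:\n{reference_check}"
    )
    return ChainResult(draft, fact_check, reference_check, final)
```

Keeping the intermediate outputs in `ChainResult` rather than returning only the final text makes it possible to inspect which review prompted which change.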

What makes this approach powerful is that it doesn’t require complex code. It’s a design philosophy. Even using natural language, you can script these verification steps into your prompt flow. It’s modular, scalable, and more transparent than blindly stacking instructions in a mega-prompt.

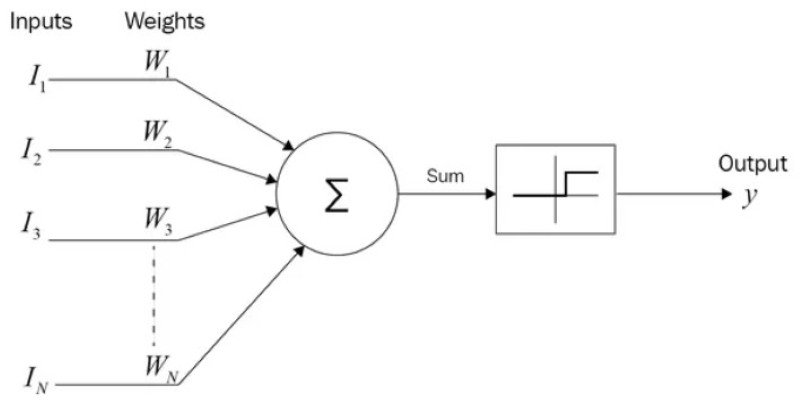

Large language models don’t know things the way people do, and they don’t reason or validate the way humans do. What they offer is probability: they generate a likely next token based on patterns learned from massive datasets. That’s powerful, but it’s also their flaw. They can sound confident while being entirely wrong. This is where hallucinations creep in: outputs that sound right but are completely false.

Here, the goal of accurate prompt engineering meets reality. The more critical the task (medical insights, code generation, legal interpretation), the more important it is that the AI’s response isn’t just fluent but verified.

The Chain of Verification confronts these problems head-on. Each link in the chain serves a different function: fact-checking, context re-alignment, assumption highlighting, and contradiction spotting. For example, in a legal use case, you might use a first prompt to summarize a contract, a second to flag missing clauses, and a third to compare it with known templates. Each output doesn't just add value—it checks the last one for accuracy and relevance.
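One way to keep such a chain modular is to describe the links as data, so that a link can be added or swapped without touching the loop that runs them. The sketch below uses the legal example from the paragraph above. The names `LEGAL_LINKS` and `run_links`, the prompt wording, and the `template` placeholder are all illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple

# Assumption: the model is any callable that maps a prompt to a text reply.
LLM = Callable[[str], str]

# Each link is (name, prompt template). "{previous}" is filled with the
# output of the link before it, so each step reviews the last one.
LEGAL_LINKS: List[Tuple[str, str]] = [
    ("summary",
     "Summarize the parties, obligations, and key dates in this contract.\n\n"
     "Contract:\n{source}"),
    ("missing_clauses",
     "Using the contract and the summary, list standard clauses that appear "
     "to be missing, and note anything the summary got wrong.\n\n"
     "Contract:\n{source}\n\nSummary:\n{previous}"),
    ("template_comparison",
     "Compare the contract with the template and check each finding below "
     "against it.\n\nTemplate:\n{template}\n\nContract:\n{source}\n\n"
     "Findings:\n{previous}"),
]


def run_links(llm: LLM, links: List[Tuple[str, str]], source: str,
              **context: str) -> Dict[str, str]:
    """Run each link in order, feeding it the previous link's output."""
    outputs: Dict[str, str] = {}
    previous = ""
    for name, template in links:
        prompt = template.format(source=source, previous=previous, **context)
        previous = llm(prompt)
        outputs[name] = previous
    return outputs
```

A call such as `run_links(my_model, LEGAL_LINKS, contract_text, template=template_text)` returns one output per link, keyed by name, where `my_model`, `contract_text`, and `template_text` stand in for whatever model wrapper and documents are in use.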

What's different about this approach isn't just the tools—it's the mindset. You assume the model will get things wrong. Then, you build layers to catch those mistakes. You aren't trying to outsmart the model. You're designing guardrails that guide it back to the truth.
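The "assume it will be wrong, then catch it" mindset can be expressed as a small verify-and-revise loop. The sketch below is one possible shape under stated assumptions: `generate_with_guardrail` is a hypothetical name, the PASS/FAIL convention is something the reviewer prompt asks for rather than anything a model guarantees, and `max_rounds` is an arbitrary cap to stop the loop from running forever.

```python
from typing import Callable, Tuple

# Assumption: the model is any callable that maps a prompt to a text reply.
LLM = Callable[[str], str]


def generate_with_guardrail(llm: LLM, task: str,
                            max_rounds: int = 3) -> Tuple[str, bool]:
    """Return (answer, verified). verified is True only if a review passed."""
    answer = llm(f"Task: {task}\nAnswer:")
    for _ in range(max_rounds):
        verdict = llm(
            "Review the answer for factual errors, unstated assumptions, "
            "and contradictions. Reply with PASS if you find none; "
            "otherwise reply with FAIL followed by the issues.\n\n"
            f"Task: {task}\n\nAnswer:\n{answer}"
        )
        if verdict.strip().upper().startswith("PASS"):
            return answer, True
        # The review found problems: ask for a revision and review again.
        answer = llm(
            "Rewrite the answer to fix the issues listed.\n\n"
            f"Task: {task}\n\nAnswer:\n{answer}\n\nIssues:\n{verdict}"
        )
    # The round limit was reached; the last revision was never reviewed.
    return answer, False
```

Returning the `verified` flag alongside the text lets the caller route unverified answers to a human reviewer instead of treating them as final.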

That’s the big shift. Old-school prompt engineering was about expressing what you wanted. Chain of Verification prompt engineering is about building a system that checks whether you got what you asked for.

The Chain of Verification isn’t just theory. It’s being adopted—quietly but widely—in fields where precision is non-negotiable. Let’s walk through a few practical examples where this method is already showing its value.

Academic institutions now use language models to condense long research papers, but not without checks. One model generates a summary. Another verifies citation accuracy, and a third flags data misinterpretation or statistical bias. This Chain of Verification helps ensure that the final summary isn't just concise but credible. By layering validation steps, universities can be more confident that the summarized content maintains academic rigor and preserves the integrity of the original research.

Financial firms use the Chain of Verification to reduce errors in investment risk assessments. An initial prompt gathers relevant market data. A second prompt checks source reliability, and a third evaluates risk levels by comparing them with historical patterns. This process doesn't just generate insights; it justifies them. Each layer strengthens confidence in the outcome, making the AI-generated analysis more accurate, audit-ready, and aligned with regulatory expectations.

Software teams increasingly rely on AI to create documentation and code snippets. A Chain of Verification ensures these outputs meet production standards. One model writes the code or guide; another reviews it for errors or clarity, and a third checks for deprecated functions or security flaws. This layered review process makes outputs safer and more reliable. What begins as a prototype evolves into a polished, publishable resource that developers can actually trust.
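Not every link in such a chain has to be another model call. As a complement to the model-based review described above (and not a replacement for it), a deterministic check can flag known-risky or removed calls in a generated Python snippet. The sketch below uses the standard-library `ast` module. `FLAGGED_CALLS` and `review_snippet` are hypothetical names, and the denylist is a tiny illustrative sample rather than a complete security policy.

```python
import ast
from typing import Dict, List, Tuple

# Illustrative denylist only; a real team would maintain its own.
FLAGGED_CALLS: Dict[str, str] = {
    "eval": "executes arbitrary code",
    "exec": "executes arbitrary code",
    "os.system": "runs a shell command; prefer subprocess.run with a list",
    "time.clock": "removed in Python 3.8; use time.perf_counter",
}


def _dotted_name(node: ast.AST) -> str:
    """Turn a Name or Attribute node into 'a.b.c'; return '' otherwise."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        base = _dotted_name(node.value)
        return f"{base}.{node.attr}" if base else ""
    return ""


def review_snippet(code: str) -> List[str]:
    """Return findings for a generated snippet, ordered by line number."""
    try:
        tree = ast.parse(code)
    except SyntaxError as exc:
        return [f"line {exc.lineno}: syntax error: {exc.msg}"]
    hits: List[Tuple[int, str]] = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = _dotted_name(node.func)
            if name in FLAGGED_CALLS:
                hits.append((node.lineno, f"{name}() {FLAGGED_CALLS[name]}"))
    return [f"line {lineno}: {message}" for lineno, message in sorted(hits)]
```

The findings can be appended to the reviewer prompt as evidence. The check only matches names as written in the snippet, so an aliased import such as `from os import system` would slip past it.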

What links all these use cases together is trust. These organizations aren’t hoping for the best—they’re engineering systems that minimize error and increase clarity. And that’s a direct benefit of verification chaining.

The Chain of Verification isn’t just a technique—it's a mindset shift in how we design and trust AI systems. By embedding validation steps within our prompts, we turn guesswork into a structured process that holds each output accountable. This doesn't eliminate AI errors, but it significantly reduces them by catching inconsistencies early. For anyone aiming to use AI in critical environments, this approach builds confidence and reliability. Prompt engineering for unparalleled accuracy starts with recognizing that precision comes from process, not just creativity. As AI continues to grow in capability, this layered verification may become the standard for producing trustworthy results.

Advertisement

Google AI open-sourced GPipe, a neural network training library for scalable machine learning and efficient model parallelism

Statistical Process Control (SPC) Charts help businesses monitor, manage, and improve process quality with real-time data insights. Learn their types, benefits, and practical applications across industries

How to use MongoDB with Pandas, NumPy, and PyArrow in Python to store, analyze, compute, and exchange data effectively. A practical guide to combining flexible storage with fast processing

Discover how DataRobot training empowers citizen data scientists with easy tools to boost data skills and workplace success

Volkswagen introduces its AI-powered self-driving technology, taking full control of development and redefining autonomous vehicle technology for safer, smarter mobility

Stay updated with AV Bytes as it captures AI industry shifts and technological breakthroughs shaping the future. Explore how innovation, real-world impact, and human-centered AI are changing the world

Gain control over who can access and modify your data by understanding Grant and Revoke in SQL. This guide simplifies managing database user permissions for secure and structured access

How the Chain of Verification enhances prompt engineering for unparalleled accuracy. Discover how structured prompt validation minimizes AI errors and boosts response reliability

Learn Apache Storm fundamentals with this detailed guide covering architecture, key concepts, and real-world use cases. Perfect for mastering real-time stream processing at scale

How the SUMPRODUCT function in Excel can simplify your data calculations. This detailed guide explains its uses, structure, and practical benefits for smarter spreadsheet management

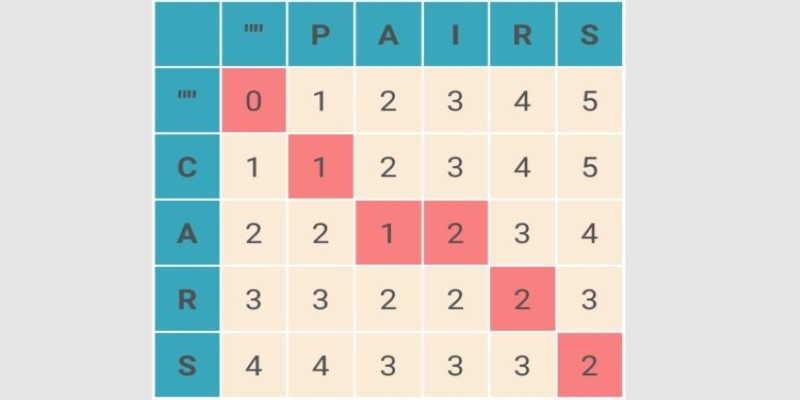

What Levenshtein Distance is and how it powers AI applications through string similarity, error correction, and fuzzy matching in natural language processing

How the AI Robotics Accelerator Program is helping universities advance robotics research with funding, mentorship, and cutting-edge tools for students and faculty